The Big Data Fallacy And Why We Need To Collect Even Bigger Data

Editor’s note: Dr. Michael Wu is the Principal Scientist of Analytics at Lithium, where he applies data-driven methodologies to investigate and understand the complex dynamics of the social Web.

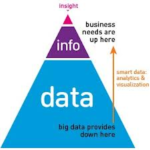

Data is only as valuable as the information and insights we can extract from it. Information and insights are what help us make better decisions and give us a competitive edge. The promise of big data is that one can glean lots of information and gain many valuable insights. However, people often don’t realize that data and information are not the same. Even if you are able to extract information from your big data, not all of it will be insightful or valuable.

Data ≠ Information

Many people speak of data and information as if they were synonymous, but there is a subtle difference between the two. Data is simply a record of events that took place: it describes what happened, when, where, how, and who was involved. Well, isn’t that informative? Yes, it is!

While data does give you information, the fallacy of big data is that more data doesn’t mean you will get “proportionately” more information. In fact, the more data you have, the less information you gain as a proportion of the data. That means the information you can extract per unit of data asymptotically diminishes as your data volume increases. This seems counterintuitive, but it is true. Let’s clarify this with a few examples.

Example 1: Data backups and copies. If you look inside your computer, you will find thousands of files you’ve created over the years. Whether they are pictures you took, emails you sent, or blog posts you wrote, they contain a certain amount of information. These files are stored as data on your hard drive, where they take up a certain amount of space.

Now, if you are as paranoid as I am, you probably back up your hard drive regularly. Think about what happens when you back up your hard drive for the first time. In terms of data, you’ve just doubled the amount of data you have: if you had 50 GB of data on your hard drive, you would have 100 GB after the backup. But will you have twice the information after the backup? Certainly not! In fact, you gain no additional information from this operation, because the information in the backup is exactly the same as the information on the original drive.
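One rough way to see this, assuming compressed size is a reasonable proxy for information content, is to compress a set of files with and without a backup copy and compare. The sketch below uses Python’s standard `lzma` module and 200 KB of pseudo-random bytes as a hypothetical stand-in for a drive’s contents. The raw data doubles, but the compressed size barely grows, because the compressor encodes the second copy as references to the first.

```python
import lzma
import random


def compressed_size(data: bytes) -> int:
    """Size in bytes after LZMA compression, used here as a rough proxy for information."""
    return len(lzma.compress(data))


# Hypothetical stand-in for a drive's contents: 200 KB of pseudo-random bytes,
# which have essentially no internal redundancy of their own.
original = random.Random(42).randbytes(200_000)
with_backup = original + original  # the drive plus one full backup copy

raw_ratio = len(with_backup) / len(original)
info_ratio = compressed_size(with_backup) / compressed_size(original)

print(f"raw data:        {raw_ratio:.2f}x")   # exactly 2x by construction
print(f"compressed size: {info_ratio:.2f}x")  # close to 1x, not 2x
```

Pseudo-random bytes are used so that any savings come entirely from the backup copy. LZMA’s default dictionary (several megabytes) is large enough to “see” the first copy; a compressor with a small window, such as zlib’s 32 KB, would miss redundancy this far apart.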

Although our personal data is not big data by any means, this example illustrates the subtle difference between data and information: they are definitely not the same animal. Now let’s look at an example involving bigger data.

Example 2: Airport surveillance video logs. First, video files are already pretty big. Second, the closed-circuit television (CCTV) systems in an airport run 24/7, and high-definition (HD) cameras only increase the data volume further. Moreover, there are hundreds, probably thousands, of security cameras all over the airport. So the video logs created by all these surveillance cameras would probably qualify as big data.

Now, what happens when you double the number of camera installations? In terms of data volume, you will again get about 2x the data. But will you get 2x the information? Probably not. Many of the cameras are probably seeing the same thing, perhaps from a slightly different angle, or sweeping overlapping areas at slightly different times. In terms of information content, we almost never get 2x. Furthermore, as the number of cameras continues to increase, the chance of information overlap also increases. That is why, as data volume increases, information shows diminishing returns: more and more of the new data is redundant.
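A toy simulation makes the diminishing returns concrete. It assumes, hypothetically, that the airport is divided into 1,000 zones and that each camera watches 50 zones chosen at random. The recorded feeds grow linearly with the number of cameras, while the number of distinct zones actually observed flattens out as overlaps accumulate.

```python
import random


def covered_zones(num_cameras: int, num_zones: int = 1_000,
                  zones_per_camera: int = 50, seed: int = 0) -> int:
    """Count distinct zones seen when each camera watches a random set of zones."""
    rng = random.Random(seed)
    seen = set()
    for _ in range(num_cameras):
        seen.update(rng.sample(range(num_zones), zones_per_camera))
    return len(seen)


for cameras in (10, 20, 40, 80, 160):
    data = cameras * 50            # camera-zone feeds recorded: grows linearly
    info = covered_zones(cameras)  # distinct zones actually observed: saturates
    print(f"{cameras:4d} cameras  data={data:5d}  distinct zones={info:4d}")
```

Because the same seed is reused, each larger run contains the cameras of the smaller ones, so the counts are directly comparable. Under uniform random placement the expected coverage with k cameras is 1000 × (1 - 0.95^k), which approaches 1,000 no matter how many cameras are added.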

A simple inequality characterizes this property: information ≤ data. Information is not data; it is only the non-redundant portion of the data. That is why copying data gives us no new information: the data volume increases, but the copy is entirely redundant.
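Information theory offers one way to make this inequality precise. Reading “information” as Shannon entropy H (in bits) is an interpretation added here, not necessarily the author’s definition, but it captures the observations above: a file stored in n bits carries at most n bits of information, an exact copy adds none, and a collection of sources carries less than the sum of its parts whenever they overlap.

```latex
\begin{aligned}
H(X) &\le n && \text{for any file } X \text{ stored in } n \text{ bits} \\
H(X, X) &= H(X) + H(X \mid X) = H(X) && \text{a backup adds no information} \\
H(X_1, \dots, X_k) &\le \sum_{i=1}^{k} H(X_i) && \text{with equality iff the } X_i \text{ are independent}
\end{aligned}
```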

Example 3: Updates on multiple social channels. What about social big data, such as tweets, updates, and/or shares? If we tweet twice as often, Twitter definitely gets twice as much data from us. But will Twitter get 2x the information? That depends on what we tweet. If there were absolutely zero redundancy among all our tweets, Twitter would get 2x the information. But that almost never happens. Let’s think about why.

First of all, we retweet each other, so many tweets are redundant copies of other tweets. Even if we exclude retweets, the chance that we coincidentally tweet about the same content is quite high, because there are so many people tweeting. Although the precise wording of each tweet may differ, the redundancy among all the tweets linking to the same Web content (whether it’s a blog post, a cool video, or breaking news) is very high. Finally, our interests and tastes in content remain fairly consistent over time. Since our tweets tend to reflect those interests and tastes, even apparently unrelated tweets from the same user carry some redundancy, because that user keeps tweeting similar content.

Clearly, even if we tweet twice as often, Twitter is not going to get 2x the information, because there is so much redundancy among our tweets (the same goes for updates and shares on other social channels). Furthermore, we often syndicate the same content across multiple social channels. Since this is merely duplicated content, it doesn’t give us any extra information about the user.
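Much of this redundancy can be spotted mechanically. The sketch below uses a hypothetical handful of posts and a deliberately crude heuristic: two posts count as the same content if they link to the same URLs or, when there is no link, if their text matches after stripping a leading “RT @user:” prefix. Real deduplication would need fuzzier matching, but even this rule collapses retweets, reworded shares of the same link, and cross-posted updates.

```python
import re

# Hypothetical posts from one user across several channels (illustrative only).
posts = [
    ("twitter", "Great read on big data: https://example.com/big-data"),
    ("twitter", "RT @alice: Great read on big data: https://example.com/big-data"),
    ("twitter", "So true, worth reading https://example.com/big-data"),
    ("twitter", "Must see! https://example.com/cool-video"),
    ("facebook", "Must see! https://example.com/cool-video"),  # cross-posted
    ("linkedin", "Must see! https://example.com/cool-video"),  # cross-posted
    ("twitter", "Breaking: https://example.com/news"),
    ("twitter", "Coffee first, then code."),
    ("twitter", "RT @bob: Coffee first, then code."),
]

URL_RE = re.compile(r"https?://\S+")
RT_RE = re.compile(r"^RT @\w+:\s*")


def content_key(text: str) -> tuple:
    """Crude content identity: the linked URLs, else the text minus any RT prefix."""
    urls = URL_RE.findall(text)
    if urls:
        return ("urls", tuple(sorted(set(urls))))
    return ("text", RT_RE.sub("", text).strip().lower())


unique = {content_key(text) for _channel, text in posts}
print(f"{len(posts)} posts, {len(unique)} distinct pieces of content")
```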

Although data does give rise to information, data ≠ information. Information is only the non-redundant part of the data. Since most data, regardless of how it is generated, has lots of built-in redundancy, the information we can extract from any data set is typically a tiny fraction of its sheer volume.

I refer to this property as the data-information inequality: information ≤ data. In nearly all realistic data sets (especially big data), the amount of information one can extract from the data is much less than the data volume (see figure below): information << data. Since the naïve assumption that big data yields a lot of information is not true, the value of big data is hugely exaggerated.

Information ≠ Insights

Although the amount of information we can extract from big data may be overrated, the insights we can derive from big data may still be extremely valuable. So what is the relationship between information and insights? All insights are information, but not all information provides insights. There are three criteria for information to provide valuable insights:

1. Interpretability. Since big data contains so much unstructured data, spanning many media and data types, a substantial amount of its data and information is not interpretable. For example, consider this sequence of numbers: 123, 243, 187, 89, and 156. What do these numbers mean? They could be the number of likes on the past five articles you read on TechCrunch, or the luminance levels of five pixels in a black-and-white image. Without more information and metadata, there is no way to interpret them. Since data and information that are not interpretable won’t offer you any insights, insights must lie within the interpretable part of the extractable information.

2. Relevance. Information must be relevant to be useful and valuable. Relevant information is also known as the signal, while irrelevant information is often referred to as noise. But relevance is subjective. Information that is relevant to me may be completely irrelevant to you, and vice versa. This is what Edward Ng, a renowned mathematician, means when he says “One man’s signal is another man’s noise.” Furthermore, relevance is not only subjective but also contextual: what is relevant to a person may change from one context to another. If I’m visiting NYC next week, NYC traffic suddenly becomes very relevant to me. But after I return to SF, the same information becomes irrelevant again. Therefore, insights are an even smaller subset within the relevant information (i.e., the signal), which is itself a tiny subset of the interpretable information.

3. Novelty. Information must be novel to be insightful: it must provide some new knowledge that you don’t already have. Clearly this criterion is also subjective. Because what I know is very different from what you know, what is insightful to me may be old information to you, and vice versa. Part of this subjectivity is inherited from the subjectivity of relevance. If some information is irrelevant to you, you most likely won’t know about it, so when you learn it, it will be new. But you probably won’t care, because it’s irrelevant: even though it is novel, it’s of no value to you.

However, once an insight is found, it is no longer new or insightful the next time you encounter it. Therefore, as we learn and accumulate knowledge from big data, insights become harder to discover. The valuable insight that everyone wants is a tiny and shrinking subset of the relevant information (i.e., the signal). If information fails any one of these criteria, it is not a valuable insight. So these three criteria successively restrict insights to an ever tinier subset of the information extractable from big data (see figure). The big data fallacy can therefore be summarized by a simple inequality: insight << information << data.
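The three criteria can be read as successive filters. The sketch below chains them over a small hypothetical set of records; the `Record` fields, the `relevant_topics` context, and the `already_known` set are all invented for illustration. Each stage can only shrink the set, which is the shape of insight << information << data.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Record:
    value: str
    meta: Optional[str]  # what the value means; None when no metadata survives
    topic: str


# Hypothetical raw data, including exact duplicates (copies, reposts, overlapping feeds).
data = [
    Record("123", None, "unknown"),
    Record("243", None, "unknown"),
    Record("Heavy traffic in Midtown", "traffic report", "nyc"),
    Record("Heavy traffic in Midtown", "traffic report", "nyc"),
    Record("Bay Bridge traffic is clear", "traffic report", "sf"),
    Record("Rain in Manhattan tomorrow", "weather", "nyc"),
    Record("Rain in Manhattan tomorrow", "weather", "nyc"),
    Record("Subway fares unchanged", "transit news", "nyc"),
]

relevant_topics = {"nyc"}                   # context: visiting NYC next week
already_known = {"Subway fares unchanged"}  # what I already know

information = set(data)                                              # drop redundancy
interpretable = {r for r in information if r.meta is not None}       # needs metadata
relevant = {r for r in interpretable if r.topic in relevant_topics}  # signal, not noise
insights = {r for r in relevant if r.value not in already_known}     # must be novel

for name, stage in [("data", data), ("information", information),
                    ("interpretable", interpretable), ("relevant", relevant),
                    ("insights", insights)]:
    print(f"{name:<13} {len(stage)}")
```

The gaps between stages are small here only because the example is tiny; the argument above is that in real big data each stage is far smaller than the one before it.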

The value of big data is hugely exaggerated because insight (the most valuable aspect of big data) is typically a few orders of magnitude smaller than the extractable information, which in turn is several orders of magnitude smaller than the sheer volume of your big data. I’m not saying big data is not valuable; it’s just overrated, because even with big data, the probability of finding valuable insights in it is still abysmally small. The big data fallacy may sound disappointing, but it is actually a strong argument for why we need even bigger data. Because the valuable insights we can derive from big data are so rare, we need to collect even more data and use more powerful analytics to increase our chance of finding them. Although big data cannot guarantee the revelation of many valuable insights, increasing the data volume does increase the odds of finding them.
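The “bigger data” argument can be made quantitative under a simplifying assumption: suppose each record independently has a tiny probability p of containing a valuable insight. Then the chance of finding at least one insight in n records is 1 - (1 - p)^n, which rises toward 1 as n grows. The value p = 1e-9 below is purely hypothetical.

```python
import math


def p_at_least_one(p: float, n: int) -> float:
    """P(at least one insight among n independent records, each with probability p)."""
    # Equals 1 - (1 - p)**n, computed with log1p/expm1 to stay accurate for tiny p.
    return -math.expm1(n * math.log1p(-p))


p = 1e-9  # hypothetical per-record chance of a valuable insight
for n in (10**6, 10**8, 10**9, 10**10):
    print(f"n = {n:>14,d}  P(at least one insight) = {p_at_least_one(p, n):.4f}")
```

Redundancy cuts against this: duplicated records are not independent draws, so n is better read as the number of effectively non-redundant records, which grows more slowly than raw data volume.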
